Published on 19 May 2014

Europe is a long-established production territory whose film industries are very unevenly dynamic. French cinema stands out for its abundance and diversity, but does not reach a real international critical mass. British cinema is more fragile, yet produces major international hits with the help of the American studios. Some traditional producing countries, such as Italy, have seen their output fall since the 1980s in the absence of sector regulation. Elsewhere, production is very irregular and mostly aimed at the domestic market... European films circulate little within Europe, while markets are dominated by American cinema. Several types of national schemes support domestic industries, while a degree of tax competition prevails to attract international projects. In this context, the European Union supports the various stages of production, but has at times also threatened the public funding schemes on which the sector relies.

European cinema, a major cultural and economic issue

The existence, autonomy and strength of a genuinely European cinema are strategic questions that touch on many areas. First, it is an economic issue: the creative industries are now recognised as a significant part of the economy of developed countries, and they create jobs and wealth. How, then, can this industrial fabric be kept on a territory, maintained and developed? It is obviously an artistic question: do European filmmakers have the means to make the films they want? It is therefore also an eminently political question: how can we keep control of our own means of cultural expression? Democracy, at the national as well as the supranational level, needs ideas, creation and the confrontation of points of view, including artistic ones. This is what justifies what is commonly called the “cultural exception”, that is, the defence of culture as a domain distinct from other sectors in international agreements and public policy. Finally, it is a geopolitical question: can cultural Europe exist independently of its member states on the one hand, and of the United States on the other? If the European Union wants to function as more than a single market, and if greater cultural integration across the continent is desirable, it would be wise to encourage more vibrant film production and, above all, wider circulation of works between EU countries and beyond.

A large market dominated by American cinema

Europe is a major market for cinema films: 933 million tickets were sold in 2012. Relative to population, this amounts to an average of 1.8 admissions per inhabitant per year, against 4.1 in the United States, with strong disparities (3.3 admissions per inhabitant in France against 0.9 in Greece). American cinema dominates with a 63% market share, followed by European cinema (33%, although in each country this share is dominated by national films). In fact, in each country, non-national European films account for less than 10% of admissions! French cinema is the most-watched European cinema outside its home country, followed by British cinema.

Production levels that vary by country

The European Union remains a major production territory: 1,299 films were produced there in 2012, against 819 in the United States (in 2011). But these films have much smaller average budgets and far less reach than their American competitors. France produces the most films in Europe (279 in 2012); Germany and Italy follow with 154 and 134 films respectively; the United Kingdom produces fewer (103 films) but is a co-production partner for American films shot outside Hollywood, which enjoy a wider reach. Each country has its own complex system of public support for film financing, differing in budget, scope and level of decision-making.

Unevenly dynamic markets and industries

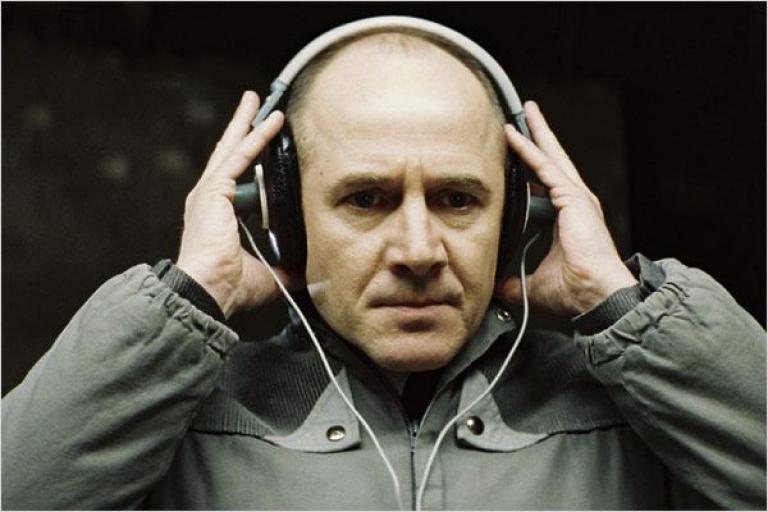

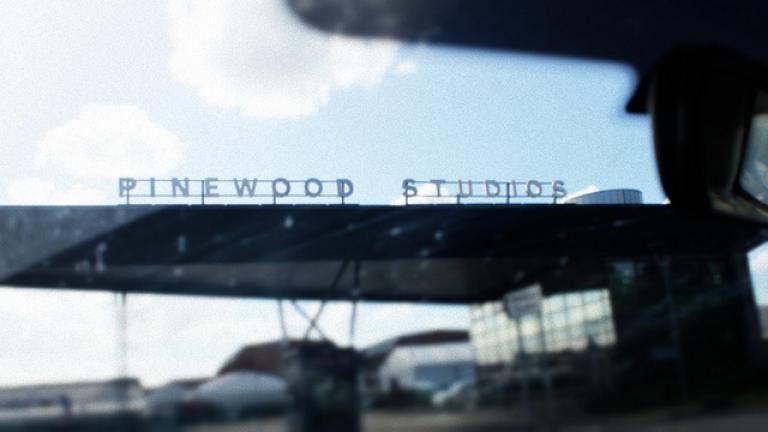

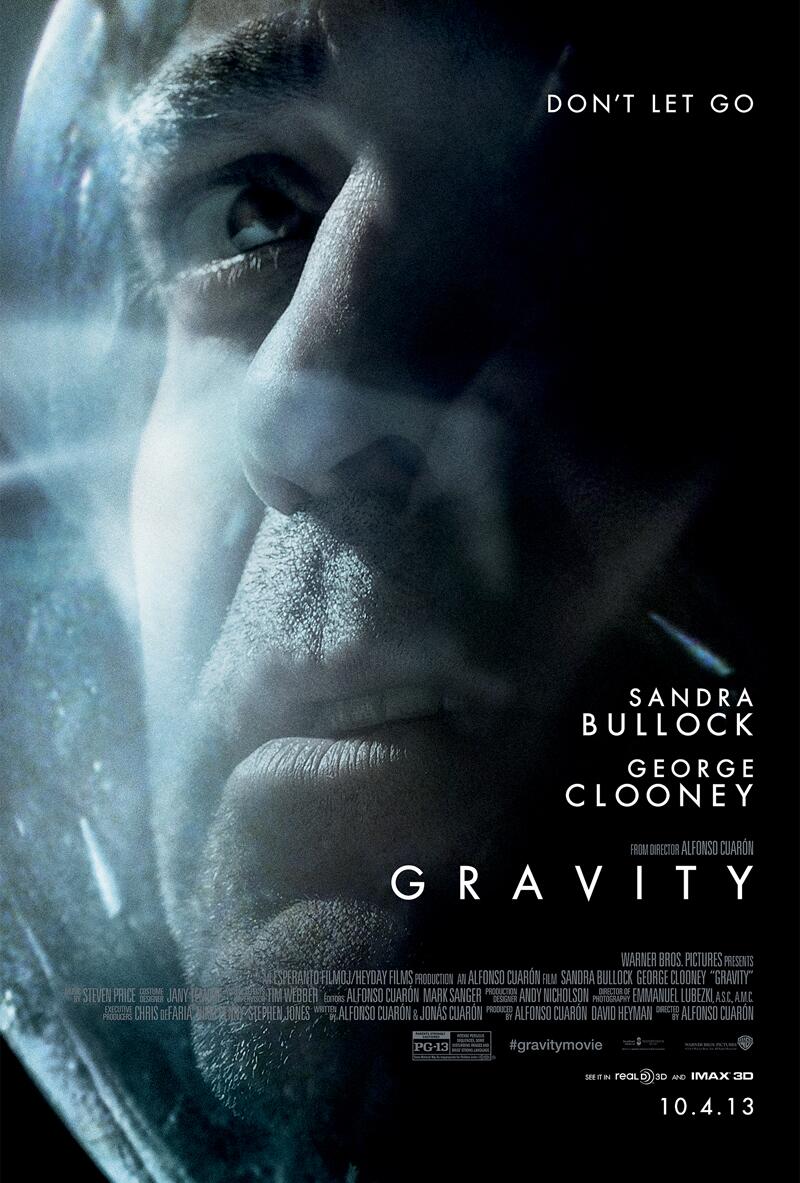

European cinema today suffers from several ills: low film output in many countries, weak circulation of works between countries, and limited reach outside Europe. France, however, stands out for its particularly high number of productions, screens and admissions. It is also one of the few countries where films from other European countries are accessible, and many filmmakers come there to produce or co-produce their films (Amour, by the Austrian Michael Haneke, winner of the Palme d'or in 2012, was produced and shot in France). The successful defence of the cultural exception before the European Commission last spring was launched from France by powerful players in this system. On the other hand, one cannot ignore the overwhelming success of another European cinema, international in scope, produced in the United Kingdom in close collaboration with Hollywood, which tends to blur the way European cinema is perceived. Are Skyfall (2012), Harry Potter (2001-2011) and Gravity (2013) European films? They are, after all, the products of a British company, EON Productions, or of a London-based producer, David Heyman… And for two of them, the subject itself is British, hence European. Moreover, this British model is clearly spreading across Europe: Inglourious Basterds (Tarantino, 2009) and The Grand Budapest Hotel (Wes Anderson, 2014) were produced in Germany (at Studio Babelsberg, near Berlin), and A Good Day to Die Hard, the fifth Die Hard film, in Hungary (at Origo Studios in Budapest).

A programme supporting every stage of the European film industry

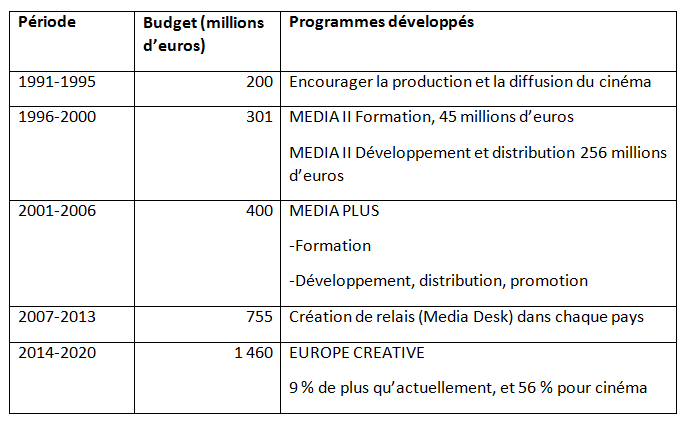

Support for culture has never been a European priority. The first form of support only appeared in 1988, at the initiative of the Council of Europe (a body separate from the European Union): a co-production support fund, which later became EURIMAGES. Within the Union, a series of studies and preparatory work at the end of the 1980s led to the MEDIA programme (Measures to Encourage the Development of the Audiovisual Industry), proposed by the European Commission and adopted by the Council of the European Union in 1990. The programme has since been expanded and, since January 2014, has been part of Creative Europe.

The first MEDIA programme was thus launched in 1991 to encourage the development, distribution and sale of European films outside their country of origin. It now operates in the 28 EU member states as well as in other countries such as Norway, Iceland and Switzerland. The programme is adopted for periods of five to seven years: MEDIA I from 1991 to 1995, MEDIA II from 1996 to 2000, MEDIA Plus and MEDIA Training from 2001 to 2006, and MEDIA 2007 from 2007 to 2013. For 2014-2020, MEDIA merges with the MEDIA Mundus programme and is complemented by support for other cultural industries within Creative Europe.

Budgets are modest compared with other sectors supported by the Union, but they grow year after year. They cover every stage of the production chain, with a clear emphasis on distribution support.

- Supporting training

- Supporting production

A small part of the programme budget goes to supporting project development and the production of works intended for cinemas, television or digital platforms. Eligibility criteria are numerous. Grants range from €30k to €60k for single projects and from €50k to €200k for project slates (slate funding: three projects submitted in the same application), within a limit of 50% of the budget. In 2014, total production support amounts to €17.5 million. Projects are selected according to the following criteria:

“Proposals are assessed by independent experts. Support is awarded to the applications scoring the most points on the basis of criteria relating to the submitted projects: quality of the project and potential for European distribution (50%), quality of the development strategy (10%), quality of the distribution and marketing strategy (20%), experience, potential and suitability of the creative team (10%), and quality of the financing strategy / feasibility of the project (10%). Additional points will be automatically awarded to projects aimed at a young audience (under 16; 10 points), to projects co-produced with a European country that does not share the applicant's language (5 points), and to projects from low production capacity countries (10 points).”
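
The award rule quoted above amounts to a weighted sum plus fixed bonuses. The Python sketch below only makes that arithmetic explicit. It assumes, hypothetically, that the experts rate each criterion on a 0 to 100 scale; the names `Application`, `score` and `rank` are illustrative and do not belong to any official MEDIA tool.

```python
from dataclasses import dataclass

# Weights of the award criteria quoted above, in percent (they sum to 100).
WEIGHTS = {
    "project_quality_and_european_potential": 50,
    "development_strategy": 10,
    "distribution_and_marketing_strategy": 20,
    "creative_team": 10,
    "financing_strategy_and_feasibility": 10,
}

# Automatic bonus points quoted above.
BONUS_YOUNG_AUDIENCE = 10        # project aimed at an audience under 16
BONUS_OTHER_LANGUAGE_COPRO = 5   # co-produced with a European country of another language
BONUS_LOW_CAPACITY_COUNTRY = 10  # project from a low production capacity country


@dataclass
class Application:
    # Hypothetical input: one expert rating from 0 to 100 per criterion.
    ratings: dict[str, int]
    young_audience: bool = False
    other_language_copro: bool = False
    low_capacity_country: bool = False


def score(app: Application) -> float:
    """Weighted score out of 100, plus the automatic bonus points."""
    if set(app.ratings) != set(WEIGHTS):
        raise ValueError("one rating is expected for each criterion")
    base = sum(WEIGHTS[name] * app.ratings[name] for name in WEIGHTS) / 100
    bonus = 0
    if app.young_audience:
        bonus += BONUS_YOUNG_AUDIENCE
    if app.other_language_copro:
        bonus += BONUS_OTHER_LANGUAGE_COPRO
    if app.low_capacity_country:
        bonus += BONUS_LOW_CAPACITY_COUNTRY
    return base + bonus


def rank(apps: list[Application]) -> list[Application]:
    """Applications from highest to lowest score; support goes to the top of the list."""
    return sorted(apps, key=score, reverse=True)


young = Application(
    ratings={
        "project_quality_and_european_potential": 80,
        "development_strategy": 70,
        "distribution_and_marketing_strategy": 90,
        "creative_team": 60,
        "financing_strategy_and_feasibility": 100,
    },
    young_audience=True,
)
print(score(young))  # 91.0 (81.0 weighted points + 10 bonus points)
```

Because the bonuses are added on top of a score out of 100, a project from a low production capacity country aimed at young audiences can outrank a slightly better-rated project that has no bonus.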

Creative Europe also supports the production of television works, provided that at least three broadcasters from three European countries take part in their financing. Support covers up to 12.5% of the budget, capped at €500k (for fiction and animation). In 2014, the budget allocated to this scheme is €11.8 million.

For producers, this programme is a significant additional source within their financing arrangements.
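
The television production rule described above combines an eligibility condition with a percentage cap and an absolute ceiling. Here is a minimal Python sketch covering only the fiction and animation case quoted in the text. It assumes, for simplicity, that an application is described only by its total budget and by the country of each participating broadcaster; the function name `tv_support_ceiling` and the country codes are hypothetical.

```python
def tv_support_ceiling(budget_eur: float, broadcaster_countries: list[str]) -> float:
    """Maximum support for a fiction or animation TV work, in euros.

    Returns 0.0 when fewer than three European countries are represented
    among the broadcasters financing the work.
    """
    # "At least three broadcasters from three European countries": counting
    # distinct countries enforces both conditions at once.
    if len(set(broadcaster_countries)) < 3:
        return 0.0
    # At most 12.5 % of the budget, and never more than 500,000 euros.
    return min(0.125 * budget_eur, 500_000.0)


print(tv_support_ceiling(2_000_000, ["FR", "DE", "IT"]))   # 250000.0
print(tv_support_ceiling(10_000_000, ["FR", "DE", "IT"]))  # 500000.0 (ceiling reached)
print(tv_support_ceiling(2_000_000, ["FR", "FR", "DE"]))   # 0.0 (only two countries)
```

Counting distinct countries rather than broadcasters is a design choice of the sketch: three channels from the same country do not satisfy the rule. With these numbers, the €500k ceiling starts to bite for budgets above €4 million.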

- Supporting distribution

Creative Europe supports distribution in three ways: automatic support, selective support and support for sales agents.

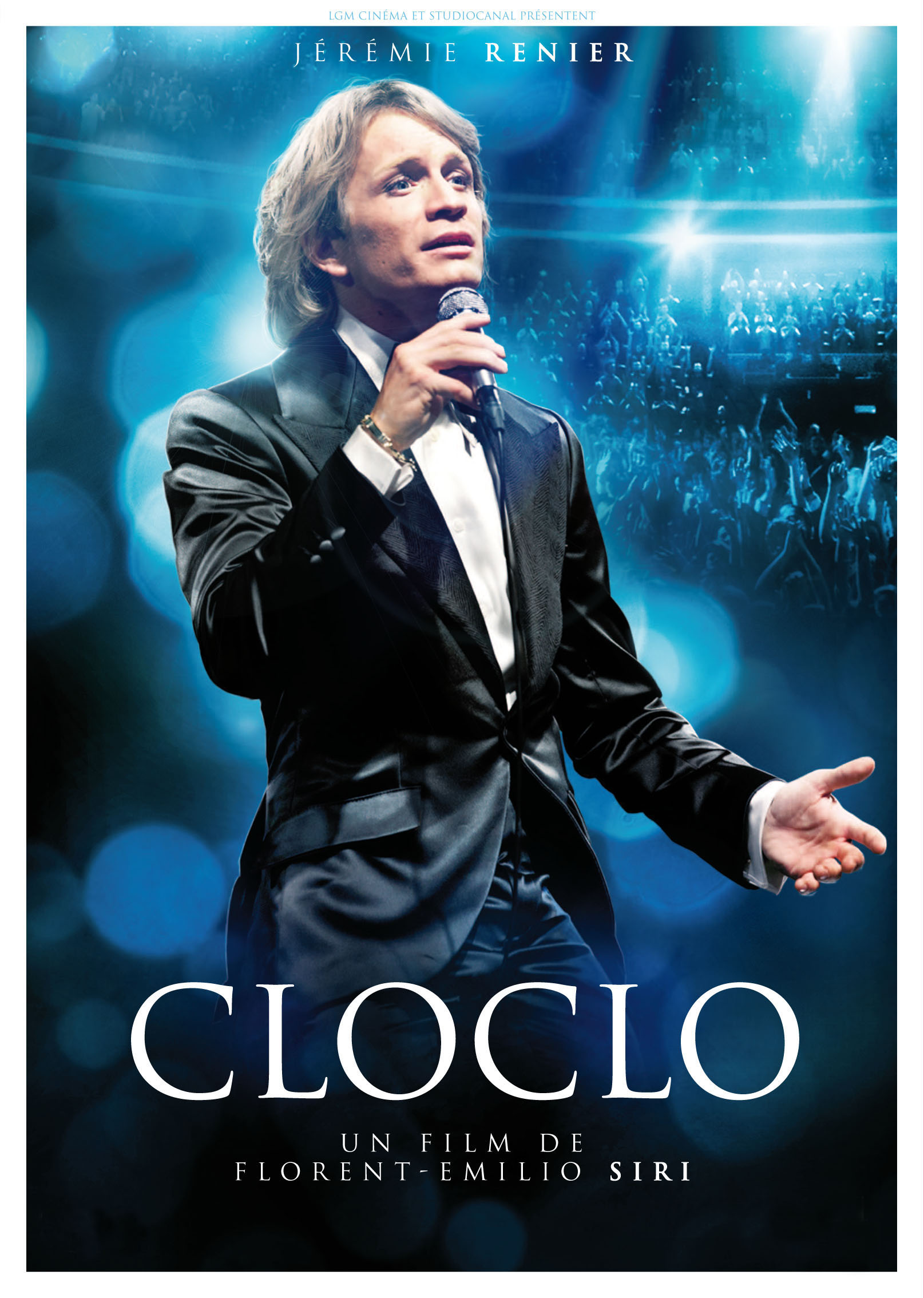

Automatic support aims to encourage European distributors to invest in the distribution or co-production of non-national European films. The amount of aid is generated by the paid admissions achieved by recent non-national European films outside their territory of origin during the previous calendar year. It must be reinvested in the co-production of non-national European films or in their distribution (as minimum guarantees or simple release costs)(1). European distributors are thus encouraged to repeat their investment in non-national films the following year. Automatic support is indexed on past successes: in France, it is weighted up by 150% below 70 00 admissions, reduced to 35% between 300,000 and 600,000 admissions, and capped at 600,000 admissions. In 2013, the scheme represented a total envelope of €20 million. For example, in 2012, on the basis of 2011 receipts, 27 German distributors shared €3.3 million in automatic support, including NFP Neue Film Produktion (€0.5k), StudioCanal (€0.5k for Populaire, Sammy 2, Vous n'avez encore rien vu, La Taupe, CloClo, Sans identité…) and X Verleih AG (€0.3k)…

Selective support encourages distributors to invest in the promotion and distribution of non-national European films by helping them finance advertising and promotion costs, as well as the production and circulation of prints. Eligible applicants are groups of at least 7 European distributors, coordinated by an international sales agent, that handle the distribution of a European film outside its territory of origin. However, German, Spanish, French, Italian and British films with a production budget above €10 million are not eligible.
In 2013, for instance, Soda Pictures Limited and Arthaus Stiftelsen for Filmkunst received €13k and €10k respectively to distribute the German film Hannah Arendt in the United Kingdom and in Norway. The total amount of this aid is €8 million, allocated according to the release territory and the number of screens.
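
The eligibility conditions for selective support listed above can be read as a simple checklist. The Python sketch below encodes them under simplifying assumptions that are ours, not the programme's: each distributor is represented only by the country code of its release territory, the five large producing countries are written as two-letter codes, and the names `DistributionGroup` and `is_eligible_for_selective_support` are hypothetical. Passing the checklist only makes a group eligible; the award itself remains selective.

```python
from dataclasses import dataclass

# Countries whose films are excluded above a 10 million euro production budget.
LARGE_PRODUCER_COUNTRIES = {"DE", "ES", "FR", "IT", "GB"}
BUDGET_LIMIT_EUR = 10_000_000
MIN_DISTRIBUTORS = 7


@dataclass
class DistributionGroup:
    film_origin: str                    # country of origin of the European film
    film_budget_eur: float              # production budget of the film
    distributor_territories: list[str]  # one release territory per distributor
    has_sales_agent: bool               # coordinated by an international sales agent


def is_eligible_for_selective_support(group: DistributionGroup) -> bool:
    # At least seven European distributors in the group.
    if len(group.distributor_territories) < MIN_DISTRIBUTORS:
        return False
    # The group must be coordinated by an international sales agent.
    if not group.has_sales_agent:
        return False
    # Distribution must take place outside the film's territory of origin.
    if group.film_origin in group.distributor_territories:
        return False
    # Big-budget films from the five largest producing countries are excluded.
    if (group.film_origin in LARGE_PRODUCER_COUNTRIES
            and group.film_budget_eur > BUDGET_LIMIT_EUR):
        return False
    return True
```

For example, with made-up budgets, seven distributors outside Germany releasing a German film budgeted at €8 million would pass every check, whereas the same group releasing a €12 million German film would not. A Danish film with the same €12 million budget would remain eligible, since the budget ceiling only applies to the five countries named in the text.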

Support for sales agents aims to encourage and support a wider transnational circulation of European films by financially supporting the international sales agents involved in their distribution. A potential fund is generated according to the agent's activity over a defined number of territories, calculated over a reference period and as a percentage of the automatic support generated by the film concerned. To take effect, this support must be reinvested in minimum guarantees or promotion costs for new European films, up to 50% of those costs. In 2012, for example, the fund supported Les Films du Losange with €30k, StudioCanal with €86k and Wild Bunch with €200k.
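
The reinvestment rule for sales agents can be sketched as a small ledger: the potential fund only becomes real money when it is spent on minimum guarantees or promotion for new European films, and it may cover at most 50% of those costs. How the potential fund itself is calculated is not detailed here, so this Python sketch simply takes it as a given starting balance; the class `SalesAgentFund` and its `reinvest` method are hypothetical.

```python
class SalesAgentFund:
    """Hypothetical ledger for a sales agent's potential support fund."""

    COVERAGE_LIMIT = 0.5  # support may cover at most 50 % of the reinvested costs

    def __init__(self, potential_fund_eur: float) -> None:
        if potential_fund_eur < 0:
            raise ValueError("the potential fund cannot be negative")
        self.balance_eur = float(potential_fund_eur)

    def reinvest(self, costs_eur: float) -> float:
        """Draw support for minimum guarantees or promotion costs on a new film.

        Returns the amount actually released: half of the costs at most,
        and never more than what remains in the fund.
        """
        if costs_eur < 0:
            raise ValueError("costs cannot be negative")
        released = min(self.balance_eur, self.COVERAGE_LIMIT * costs_eur)
        self.balance_eur -= released
        return released


fund = SalesAgentFund(100_000)
print(fund.reinvest(80_000))   # 40000.0 (50 % of the costs)
print(fund.reinvest(200_000))  # 60000.0 (limited by the remaining balance)
print(fund.balance_eur)        # 0.0
```

The two limits work in opposite directions: the 50% rule forces the agent to commit its own money alongside the support, while the balance caps the total that can be drawn.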

Finally, the distribution of European cinema outside Europe is supported by the MEDIA Mundus programme.

- Supporting theatrical and online exhibition

The largest share of the Creative Europe budget goes to supporting the exhibition of European cinema in European theatres and to the digitisation of cinemas.

The Europa Cinemas association acts as the intermediary between MEDIA and the European cinemas that agree to screen European films. It distributes about €10 million a year to a network of cinemas that commit to screening more non-national European films. The stated objective is threefold: to increase programming and attendance, to promote initiatives aimed at young audiences, and to foster a diverse offer in cinemas.

Exhibition through other channels is also supported, particularly digital channels. Universciné, the French video-on-demand platform, received €750k in 2012 and is developing a genuinely European strategy, in cooperation with other European players and through the EuroVOD platform. Curzon, the British independent cinema network, has also launched its video-on-demand service, Curzon-on-Demand, and received €400k from MEDIA in 2012.

- Promoting European cinema in Europe and worldwide

The Council of Europe and the EURIMAGES programme

Since 1988, Eurimages has been the Council of Europe fund supporting the co-production, distribution and exhibition of European cinematographic works. The fund has 35 member states. It has an annual budget of €25 million and, since its creation, has supported more than 1,500 European co-productions for a total of about €468 million. It offers four support programmes: co-production, distribution, exhibition and cinema equipment. Eurimages support takes the form either of an advance on receipts (for co-production support) or of a grant (in the other cases). In 2012, for example, Eurimages supported Le Passé by Asghar Farhadi (Iran-France), Nymphomaniac by Lars von Trier (Denmark), The Cut by Fatih Akin (Germany) and L'Écume des jours by Michel Gondry (France), each with more than €500k.

Awarding and labelling European cinema

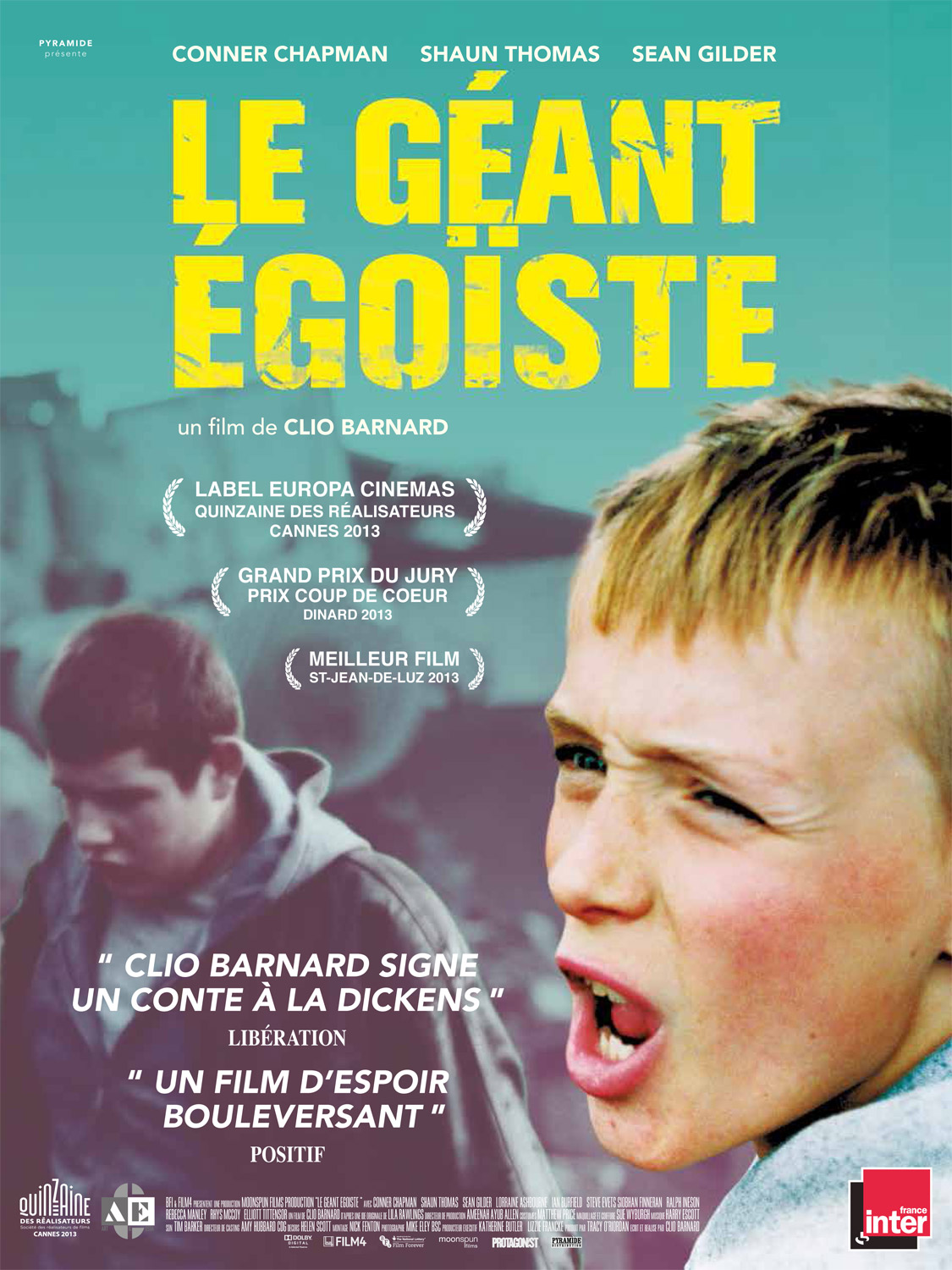

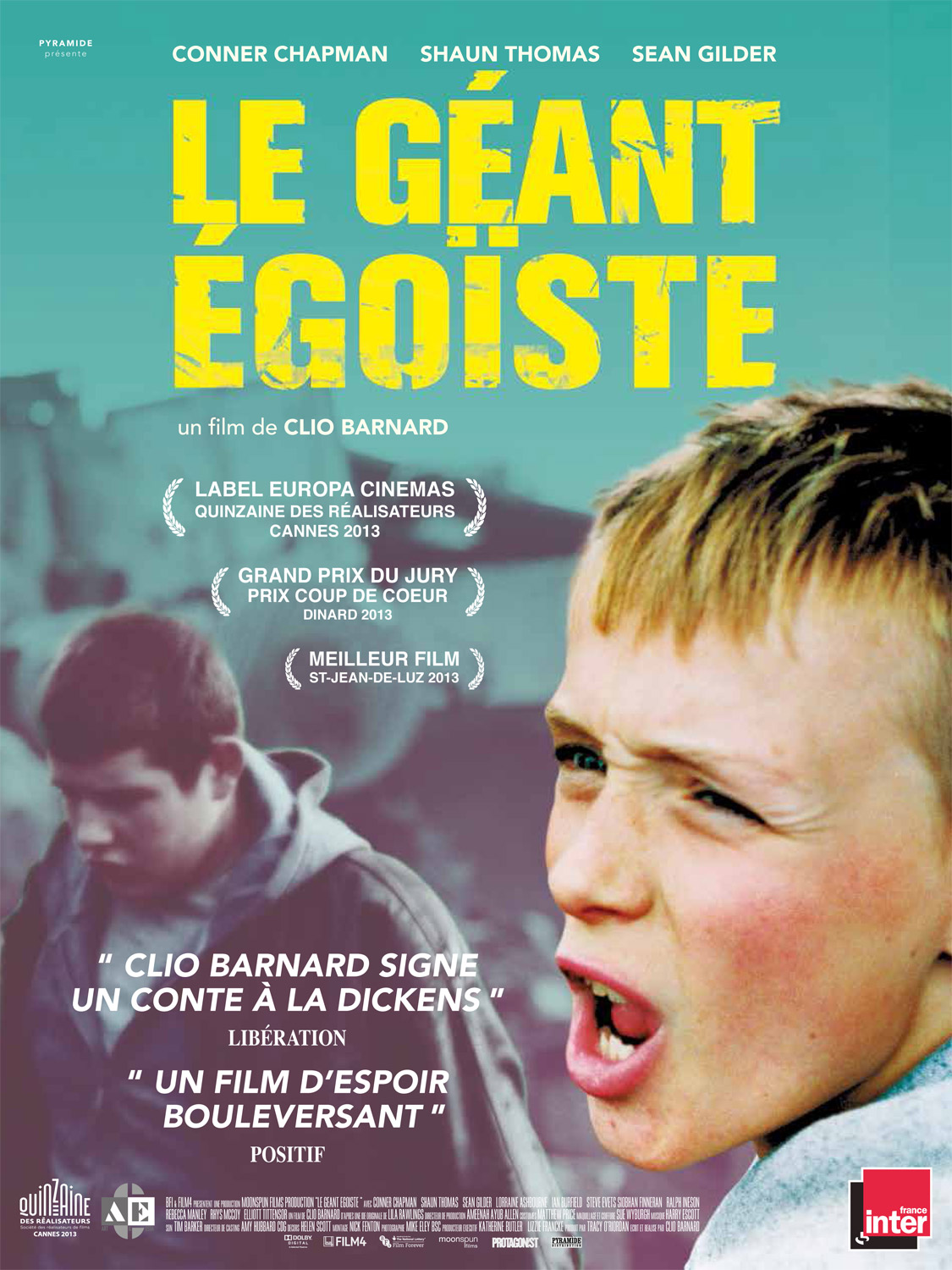

Outside the international festivals (Cannes, Venice, Berlin), which pay no attention to the European dimension of films, and the national ceremonies (Césars, BAFTA…), two European institutions award prizes to European cinema: the European Parliament, with the LUX Prize, and the European Film Academy, an association based in Berlin and the counterpart of the Academy of Motion Picture Arts and Sciences in the United States. The European Parliament awards the LUX Prize to an outstanding European film chosen by MEPs for its cinematic quality and for the way it illustrates the universality of European values, cultural diversity and the process of building Europe. The Parliament also supports the circulation of the shortlisted films in several European cities throughout the autumn preceding the choice of the winner (in December). In 2013, the British film The Selfish Giant, the Italian Miele and the Belgian The Broken Circle Breakdown were shortlisted, and the Belgian film won the LUX Prize. The winning film also receives support to be subtitled in the 23 official languages of the European Union.

Is the European Union really a threat to European cinema?

Beyond the specific programmes it funds, the European Union acts as a regulator, which can be problematic for national policies that do not fit the overall model it promotes. Several debates thus shook the European film industry in 2013. On the one hand, preparations for free-trade negotiations between the United States and Europe, led in March by the European Trade Commissioner Karel De Gucht, called into question the principle of the cultural exception and threatened the fragile European balance with a massive surge of American cinema. On the other hand, the Cinema Communication could have destabilised national aid systems by decoupling them from where expenditure is made.

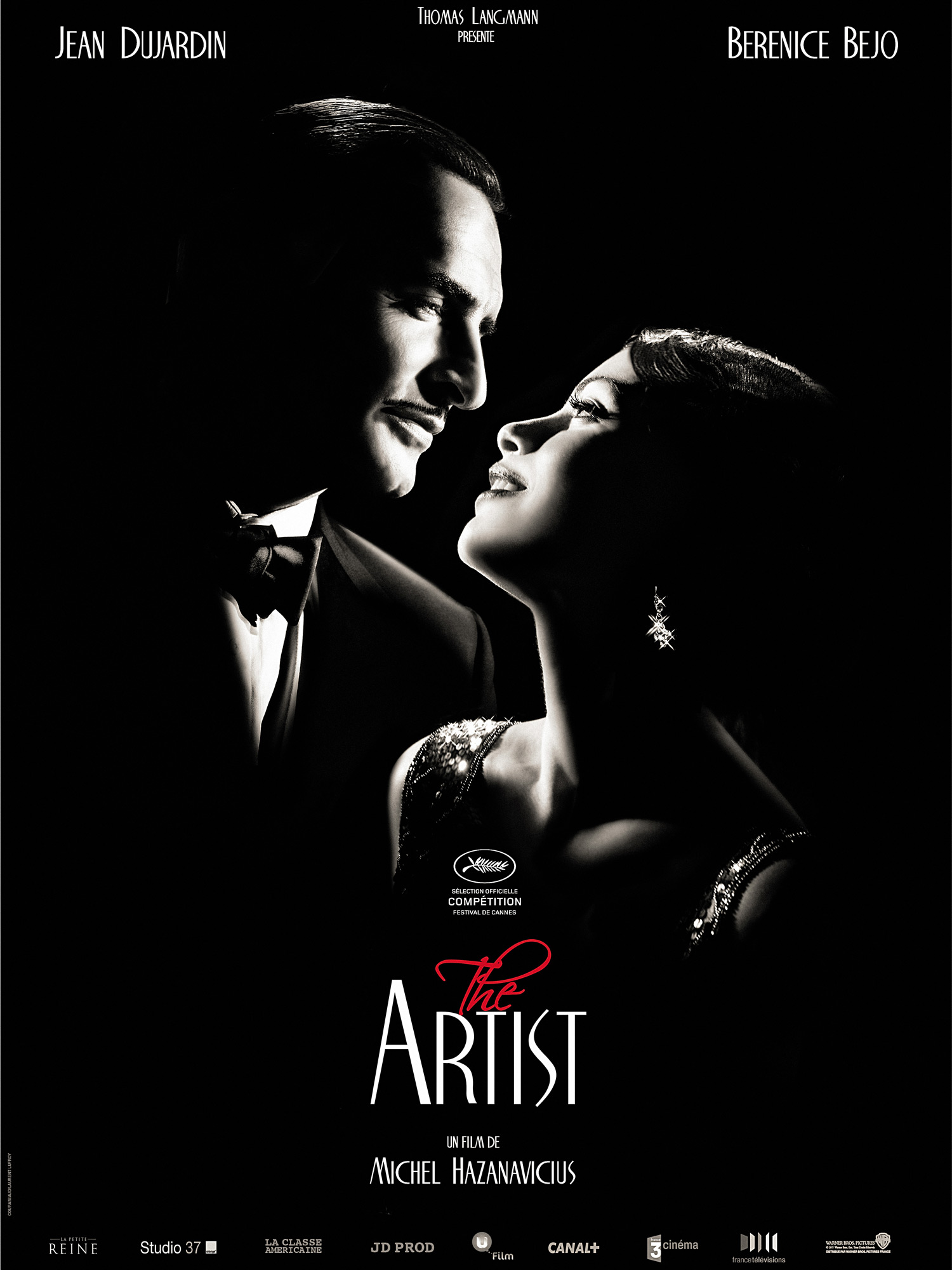

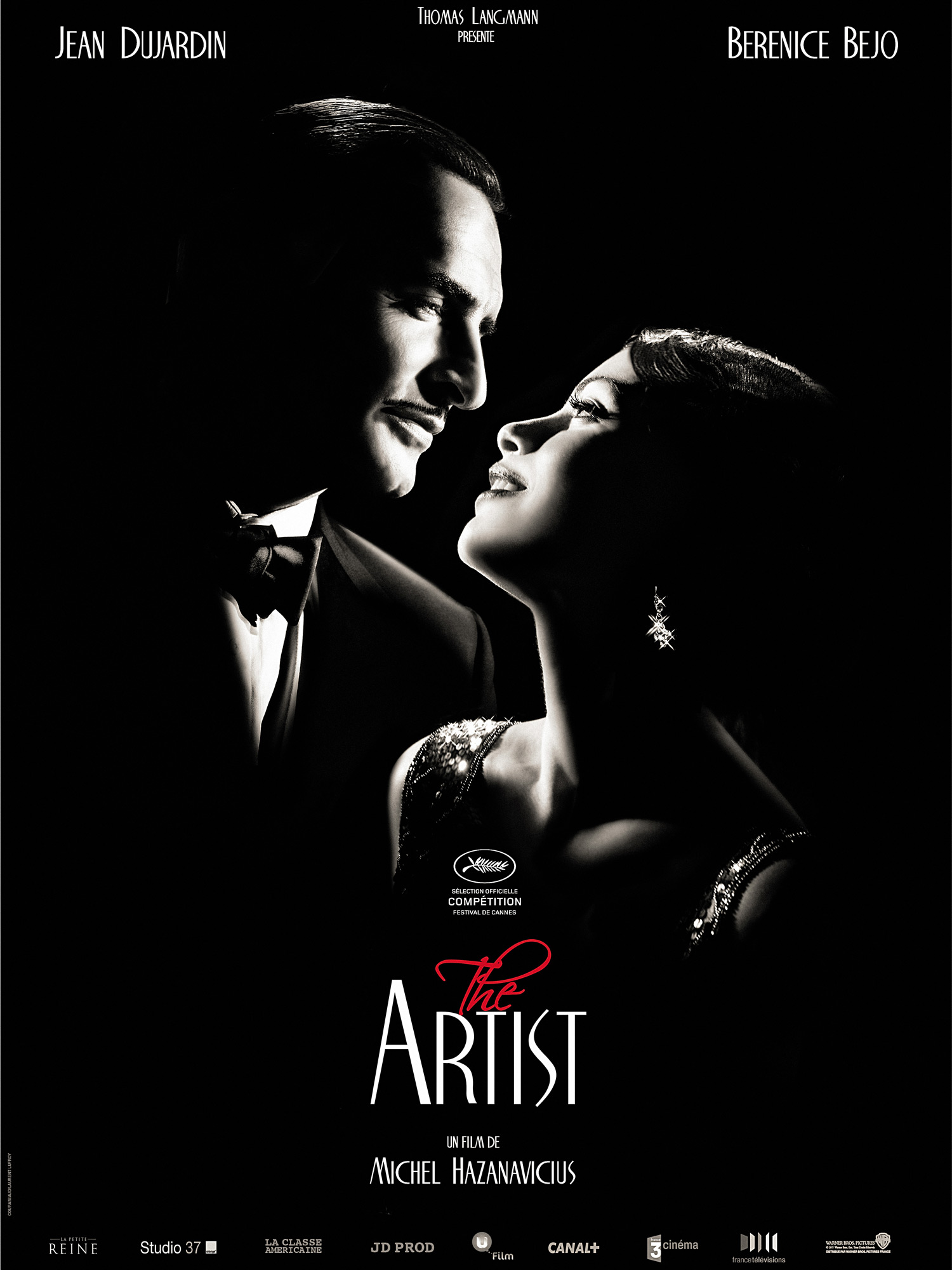

Against the first threat, a large number of figures from the European film industry mobilised. A petition against the inclusion of the film industry in the free-trade agreements, “The cultural exception is not negotiable”, was signed by some forty European filmmakers, including directors Costa-Gavras, Radu Mihaileanu, Stephen Frears, Ken Loach, Lucas Belvaux, Michel Hazanavicius, Dariusz Jablonski, Cristian Mungiu and Daniele Luchetti, and actress Bérénice Bejo. In other words, respected figures in the industry whose films have enjoyed major success (notably The Artist, which won at the Oscars in 2012). Figures from outside Europe joined the movement: the American David Lynch, the New Zealander Jane Campion, the Brazilian Walter Salles…

In May, fourteen European culture ministers called for the audiovisual sector to be excluded from the negotiations (the ministers of Germany, Austria, Belgium, Bulgaria, Cyprus, Spain, France, Hungary, Italy, Poland, Portugal, Romania, Slovakia and Slovenia). In the middle of the Cannes Film Festival, Steven Spielberg used the opening of the awards ceremony to remark: “The cultural exception is the best way to preserve the diversity of cinema.” A few days later, at a conference organised by the CNC, he added: “The cultural exception encourages filmmakers to make films about their own culture. We need that more than ever (…) The most important thing is to preserve the cultural environment of films, because it is good for business too.” Éric Garandeau, then president of the CNC, added that the French system even supports foreign films “produced or co-produced with France, notably the Coen brothers, Amat Escalante, Asghar Farhadi, Valeria Golino, James Gray, Rithy Panh, Lucia Puenzo, Mahamat Saleh Haroun, Hiner Saleem, Paolo Sorrentino”… Lengthy discussions took place between the President of the European Commission, José Manuel Barroso, and the various stakeholders. The highest levels of the French state joined in. In June, Prime Minister Jean-Marc Ayrault, backed by the President, declared:

“France will oppose the opening of negotiations if culture and the cultural industries are not protected and excluded from them. France will go as far as using its political veto. This is our identity, this is our fight. We are not alone, but we are leading it, with the support of the nation's elected representatives.”

européenne se sont mobilisées. Une pétition contre l’intégration de l’industrie du cinéma aux accords de libre-échange, « L’exception culturelle n’est pas négociable », est ainsi signée par une quarantaine de cinéastes européens, comme les réalisateurs Costa Gavras, Radu Mihaileanu, Stephen Frears, Ken Loach, Luca Belvaux, Michel Hazanavicius, Dariusz Jablonski, Cristian Mungiu, Daniele Luchetti, et l’actrice Bérénice Béjo - autrement dit des personnalités respectées du milieu, dont les films ont connu d’importants succès (en particulier The Artist, couronné aux Oscars en 2012). Des personnalités extra-européennes se sont ajoutées au mouvement : l’Américain David Lynch, la Néozélandais Jane Campion, le Brésilien Walter Salles…

The process culminated at the European Parliament on 11 June, where Bérénice Bejo went to represent the movement and read out a letter from the director Wim Wenders denouncing “this open-heart surgery on Europe, without anaesthesia”… On 14 June, the European Commission finally removed the audiovisual sector from the European Union's negotiating mandate.

Then, in the autumn, the debate over the new Cinema Communication stirred up the industry. The draft aimed to weaken the link between aid and the territorialisation of spending, in the name of free competition between European states. In the end, the new Cinema Communication adopted on 14 November still allows states to territorialise their aid, in the name of cultural diversity, “which requires the preservation of the resources and know-how of the industry at national or local level”: a territorial spending obligation is permitted up to 160% of the amount of aid, and may cover at most 80% of total production spending.
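To make the two caps concrete, here is a minimal Python sketch that computes the largest territorial spending obligation a state could impose on a film, under the simplifying assumption that both limits apply at once (the lower of 160% of the aid and 80% of total production spending). The function name `max_territorial_obligation` is hypothetical, amounts are taken as whole euros, and the sketch ignores any further conditions the Communication itself may set.

```python
def max_territorial_obligation(aid_eur: int, budget_eur: int) -> int:
    """Hypothetical helper: cap on the spending a state may require in its territory.

    Simplified reading of the two limits described above (an assumption, not the legal text):
    - at most 160% of the aid amount,
    - and never more than 80% of total production spending.
    Integer arithmetic avoids floating-point rounding; results round down to the euro.
    """
    if aid_eur < 0 or budget_eur < 0:
        raise ValueError("amounts must be non-negative")
    cap_from_aid = aid_eur * 160 // 100      # 160% of the aid
    cap_from_budget = budget_eur * 80 // 100  # 80% of total spending
    # Both ceilings hold simultaneously, so the binding one is the smaller.
    return min(cap_from_aid, cap_from_budget)


# 1 M EUR of aid on a 5 M EUR film: the 160% rule binds.
print(max_territorial_obligation(1_000_000, 5_000_000))  # 1600000
# 3 M EUR of aid on the same film: the 80% ceiling binds instead.
print(max_territorial_obligation(3_000_000, 5_000_000))  # 4000000
```

Taking the minimum reflects the reading that both ceilings apply together: for modest aid the 160% rule is what limits the obligation, while for aid that is large relative to the budget the 80% ceiling takes over.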

European film policies therefore do not replace national policies, and cannot on their own stimulate production in the Union's smaller countries or revive the industry of large countries in decline (Italy, Spain…). Their support at every level does, however, probably help European industries fare somewhat better and films circulate more widely between countries. What is more, the defence of the French model waged by industry professionals against the various reforms proposed by Brussels seems to strengthen the French system in its role as a model.

--

Photo credits:

The European Commission (TP Com / Flickr)
